Routing Multiple Domains using HAProxy (HTTP and HTTPS)

How to route multiple domains over HTTP and HTTPS using HAProxy. The SSL certificates are generated by the hosts themselves, so HAProxy never has to handle them, which makes for a super easy setup!

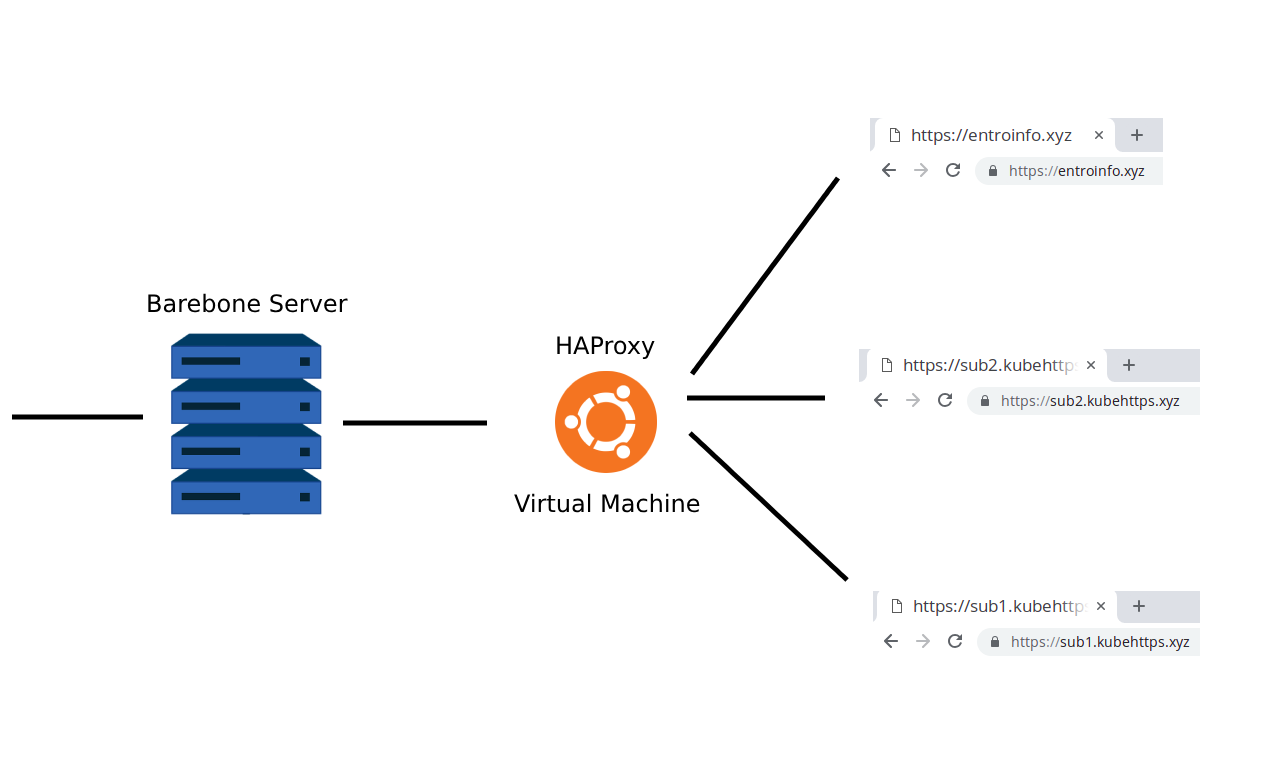

Some projects that we work on require us to set up the system on a bare-metal server as opposed to cloud infrastructure. We use KVM to install virtualised systems on the server, which makes migration easier, among other benefits. When we do this, we often have multiple domains and sub-domains pointing to the same server and we need to route the traffic to the correct virtual machine.

There are many ways to route traffic, but I was looking into using HAProxy to do it. HAProxy is a great load balancer and has fantastic performance. When the job is simply to get network traffic to the right server, it is one of your best options.

But how do we route both HTTP and HTTPS traffic without HAProxy needing any certificates? This was the million-dollar question: is it possible to route traffic on ports 80 and 443 without any SSL requirements on the routing server (i.e. the host that serves the site generates the SSL certificate)?

This guide runs through the setup and configuration of HAProxy to get this working, where all domains enter at the same point but the systems that serve the sites are all on different hosts. This is my setup:

                                            +--------------------+
                                       +--> | sub1.kubehttps.xyz |
                                       |    +--------------------+
                                       |
                                       |    +--------------------+
                                       +--> | sub2.kubehttps.xyz |
    +-----------+        +--------+    |    +--------------------+
    | main host +------> | router +----+
    +-----------+        +--------+    |    +--------------------+
                                       +--> | entroinfo.xyz      |
                                            +--------------------+

The main host is the entry IP that is the same for sub1.kubehttps.xyz, sub2.kubehttps.xyz, and entroinfo.xyz.

The main host then passes all traffic to the router, a little Virtual Machine (VM) that directs traffic to the correct hosts. Ideally, you'd want DNS set up here to name each host. For this example, I set the names up in the /etc/hosts file.

The Main Host Setup

The main host would be your bare-metal machine. We want as little setup on this as possible to avoid reliance on it. Ideally, we'd be able to move our virtualised environment to a new server with almost no setup. The first step is to install HAProxy,

sudo apt install haproxy -y

Once that is installed, we can set up the HAProxy configuration at /etc/haproxy/haproxy.cfg to forward all of the port 80 and 443 traffic to the router VM,
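A minimal sketch of what that forwarding configuration could look like, assuming the stock `global` and `defaults` sections from the package are left in place and that `router` resolves through /etc/hosts. The frontend and backend names are illustrative, not taken from the original setup. Both ports use `mode tcp` here so the main host passes bytes through without inspecting them:

```haproxy
# Appended to /etc/haproxy/haproxy.cfg on the main host.
# Frontend/backend names are illustrative assumptions.

frontend http_in
    bind *:80
    mode tcp
    option tcplog
    default_backend router_http

backend router_http
    mode tcp
    # "router" is resolved through /etc/hosts
    server router router:80

frontend https_in
    bind *:443
    mode tcp
    option tcplog
    default_backend router_https

backend router_https
    mode tcp
    server router router:443
```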

As mentioned previously, I've set up router in the /etc/hosts file to point to the router VM IP address.

That's all we need to do. Just restart HAProxy and you're good,

sudo systemctl restart haproxy
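If the restart fails, HAProxy's check mode can point at the offending line. This is an optional extra step, not part of the original walkthrough:

```shell
# -c only validates the configuration and exits; -f names the file to check
sudo haproxy -c -f /etc/haproxy/haproxy.cfg
```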

The Router Host Setup

The router is configured to direct traffic to the correct host based on the domain. I've set up the hosts file on the router host as follows,

These were real IP addresses of hosts that I started up (temporarily) on vultr.com for this demonstration.
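The entries have the following shape. The addresses below are placeholders from the 192.0.2.0/24 documentation range rather than the real ones, and the mapping of `server1` to `server3` onto the three domains is an assumption for illustration:

```text
# /etc/hosts on the router VM (placeholder addresses)
192.0.2.11  server1   # assumed to serve sub1.kubehttps.xyz
192.0.2.12  server2   # assumed to serve sub2.kubehttps.xyz
192.0.2.13  server3   # assumed to serve entroinfo.xyz
```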

On a side note, I honestly think that vultr.com is one of the best cloud host providers out there. It's definitely worth it to take a look when you're trying to find a cloud provider!

We now need to set up HAProxy on this system and forward traffic correctly. This is how my /etc/haproxy/haproxy.cfg looked,

What you'll notice here is that I bind to port 80 using mode http but I bind to port 443 using mode tcp. This is to avoid the need for certificates on the 443 bind. Basically, what I'm doing here is routing 443 to a host and I expect that host to have the certificate set up.
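A minimal sketch of a router configuration matching that description, assuming the package's stock `global` and `defaults` sections and the `server1` to `server3` names from /etc/hosts. Which name maps to which domain is an assumption, as are the frontend and backend names. On port 80 the `Host` header selects the backend; on port 443 HAProxy stays in TCP mode and reads only the unencrypted SNI field of the TLS ClientHello, so the certificate and private key never leave the web host:

```haproxy
frontend http_in
    bind *:80
    mode http
    # Plain HTTP: pick the backend from the Host header
    use_backend sub1_http      if { hdr(host) -i sub1.kubehttps.xyz }
    use_backend sub2_http      if { hdr(host) -i sub2.kubehttps.xyz }
    use_backend entroinfo_http if { hdr(host) -i entroinfo.xyz }

backend sub1_http
    mode http
    server server1 server1:80

backend sub2_http
    mode http
    server server2 server2:80

backend entroinfo_http
    mode http
    server server3 server3:80

frontend https_in
    bind *:443
    mode tcp
    option tcplog
    # Wait up to 5s for the TLS ClientHello so the SNI can be read
    tcp-request inspect-delay 5s
    tcp-request content accept if { req.ssl_hello_type 1 }
    # HTTPS: pick the backend from the SNI, without decrypting anything
    use_backend sub1_https      if { req.ssl_sni -i sub1.kubehttps.xyz }
    use_backend sub2_https      if { req.ssl_sni -i sub2.kubehttps.xyz }
    use_backend entroinfo_https if { req.ssl_sni -i entroinfo.xyz }

backend sub1_https
    mode tcp
    server server1 server1:443

backend sub2_https
    mode tcp
    server server2 server2:443

backend entroinfo_https
    mode tcp
    server server3 server3:443
```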

You might also notice that at the moment I'm not load balancing any of the servers. That would be pretty straightforward, though: you'd just add more servers to the backend configurations above.
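As a sketch of that idea, a load-balanced backend with two servers could look like the following. The backend name and the second host, `server3b`, are hypothetical, and `server3b` would need its own /etc/hosts entry. Because port 443 is passed through in TCP mode, every server in the backend would also need a valid certificate for the domain:

```haproxy
backend entroinfo_https
    mode tcp
    balance roundrobin
    # "check" enables basic TCP health checks so an unreachable host is skipped
    server server3  server3:443  check
    server server3b server3b:443 check
```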

Finally, you'll see my naming in the backend entries looks like this,

server server3 server3:443

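The middle token, server3, is just a label for the server and can be any name you want; usually you'd make it quite descriptive to keep the config readable. The last part, server3:443, is the address (resolved here through /etc/hosts) and the port.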

Cool, we're ready with this config so just run,

sudo systemctl restart haproxy

And we're good to go!

The Web Host Setups

The final step is to set up the web hosts to serve traffic and to generate the HTTPS certificates using Certbot and Let's Encrypt.

I created a little "hello world" repository on GitHub that installs docker and runs a mini express.js server. This was just set to serve up the "hello" message on port 3000 on that host.

The next step was to set up nginx to pass traffic from port 80 to port 3000.

sudo apt install nginx -y

I then edited the /etc/nginx/sites-available/default and set it to,

You're free to change this to meet your needs. In my case I was hosting the app on port 3000; yours might be different. It's good to set the expected server_name here so that the forwarding is tied to that domain name. Bear in mind that when this is the only server block, nginx treats it as the default and answers requests for other host names too, so add a catch-all block if you want those rejected.
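A minimal sketch of such a server block, assuming the app listens on port 3000 on the same machine and that this host serves entroinfo.xyz. The `Host` header line is a common addition rather than something the original setup is known to include:

```nginx
server {
    listen 80;
    server_name entroinfo.xyz;

    location / {
        # Hand every request to the app listening on port 3000
        proxy_pass http://localhost:3000;
        # Pass the original host name through to the app
        proxy_set_header Host $host;
    }
}
```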

Restart nginx with this new config,

sudo systemctl restart nginx

Now we need to install and run certbot,
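On a recent Ubuntu or Debian release this could look like the following. The package names and the choice of entroinfo.xyz as the domain on this host are assumptions; older releases may need a different installation route:

```shell
# Install Certbot together with its nginx plugin
sudo apt install certbot python3-certbot-nginx -y

# Request a certificate and let Certbot edit the nginx config for this domain
sudo certbot --nginx -d entroinfo.xyz
```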

When running certbot, it'll ask you whether to keep serving plain HTTP on port 80 or redirect it to HTTPS on port 443. This is up to you, but in my case I chose the redirect (option 2).

This changes the /etc/nginx/sites-available/default config as follows,
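With the redirect option, Certbot typically rewrites the file along these lines. This is a sketch of its usual output for a domain named entroinfo.xyz with the app on port 3000, not a copy of the original file, and the exact lines vary between Certbot versions:

```nginx
server {
    server_name entroinfo.xyz;

    location / {
        proxy_pass http://localhost:3000;
    }

    listen 443 ssl; # managed by Certbot
    ssl_certificate /etc/letsencrypt/live/entroinfo.xyz/fullchain.pem; # managed by Certbot
    ssl_certificate_key /etc/letsencrypt/live/entroinfo.xyz/privkey.pem; # managed by Certbot
    include /etc/letsencrypt/options-ssl-nginx.conf; # managed by Certbot
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; # managed by Certbot
}

server {
    # Redirect plain HTTP for this domain to HTTPS
    if ($host = entroinfo.xyz) {
        return 301 https://$host$request_uri;
    } # managed by Certbot

    listen 80;
    server_name entroinfo.xyz;
    return 404; # managed by Certbot
}
```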

Not only does it update that config, it also sets up a cron job to renew the certificates automatically before they expire.

For the sake of completeness, the setup for sub1.kubehttps.xyz was very similar. My nginx config looked like this,

And the certbot installation and command looked like this,
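Assuming the only difference from the first host is the domain name (so the nginx server block carries `server_name sub1.kubehttps.xyz;`), the Certbot step on that host would be:

```shell
# Same nginx plugin as before, with the sub-domain as the certificate name
sudo certbot --nginx -d sub1.kubehttps.xyz
```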

You're all set!

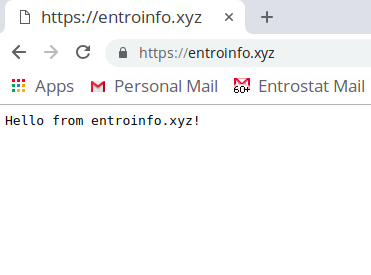

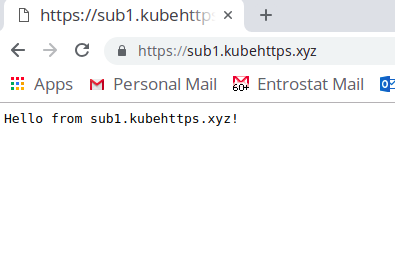

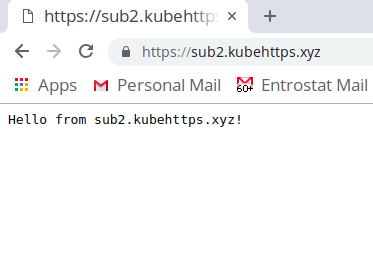

All of the systems have been set up correctly and your router config knows where to send traffic, so you're done and ready to test :) In my case, I visited each domain from my browser.
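The same check can be made from a terminal. A small sketch using curl, where `-I` fetches only the response headers:

```shell
# Plain HTTP: a 301 redirect is expected if the Certbot redirect option was chosen
curl -I http://entroinfo.xyz

# HTTPS: the certificate presented comes from the web host, not from HAProxy
curl -I https://sub1.kubehttps.xyz
curl -I https://sub2.kubehttps.xyz
```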

The IP address for entroinfo.xyz, sub1.kubehttps.xyz, and sub2.kubehttps.xyz was the same, but all of them were routed to different hosts using HAProxy. The use of nginx as a reverse proxy is optional, but I don't see a downside here: it's super easy to set up and there are tonnes of resources online on using nginx for pretty much anything!

Conclusion

So there you have it: it's possible to use HAProxy to route HTTP and HTTPS traffic to different hosts. This allows for easy setups of multiple domains on one host machine, where each domain is a new VM or a different port on the current host. I hope this helps. It's certainly been useful for some of the setups at Entrostat!